Software is the key: vehicles are identified from three features

EyeQ2 can simultaneously handle the following detections (Figure 6), all of which are needed for automotive preventive safety systems and collision avoidance assistance systems: (1) vehicles ahead, (2) lane lines, (3) the distance to the vehicle ahead, (4) the relative speed and relative acceleration with respect to the vehicle ahead, (5) whether the vehicle ahead is in the same lane, (6) curves, and (7) pedestrians.

Figure 6: Detecting vehicles and lane lines

Detecting both vehicles and lane lines improves alarm accuracy and lets the system distinguish vehicles in the same lane from vehicles in other lanes.

For (1) vehicle detection, a vehicle is identified from three features: the rectangle formed by the rear of the vehicle, the rear wheels, and the two tail lights (Figure 7). The system judges whether the object ahead is a car by comparing it against dozens of pre-stored vehicle shape patterns. If it is a car, the system can then determine the position of the rear tires. The tail lights are an important detection cue at night.

Figure 7: Capturing features behind the vehicle

An object ahead is judged to be a vehicle by detecting a rectangular or square rear outline, two tires, and the tail lights.

To identify a vehicle, its image on the CMOS sensor must be more than 13 pixels wide, which corresponds to a vehicle 1.6 m wide at a distance of 115 m. In practice, given the performance of the CMOS sensor, the forward recognition limit is about 90 m.
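The pixel figures above follow from simple pinhole-camera geometry. The sketch below is illustrative only: it assumes an ideal pinhole model, and the helper names and the focal length it derives are not Mobileye parameters but values inferred from the 13-pixel, 1.6 m, 115 m figures quoted in this article.

```python
def focal_length_px(width_px: float, width_m: float, distance_m: float) -> float:
    """Pinhole model: width_px = f_px * width_m / distance_m, solved for f_px."""
    return width_px * distance_m / width_m


def projected_width_px(f_px: float, width_m: float, distance_m: float) -> float:
    """Width in pixels of an object width_m wide seen at distance_m."""
    return f_px * width_m / distance_m


# Assumption: derive an effective focal length (in pixels) from the article's figures.
f_px = focal_length_px(width_px=13, width_m=1.6, distance_m=115)

# A 1.6 m wide vehicle at the stated practical limit of 90 m.
vehicle_px_at_90m = projected_width_px(f_px, width_m=1.6, distance_m=90)

# A 10 cm lane line at 50 m, for comparison with the lane line figure in the article.
lane_px_at_50m = projected_width_px(f_px, width_m=0.10, distance_m=50)

print(round(f_px), round(vehicle_px_at_90m, 1), round(lane_px_at_50m, 1))
```

Under these assumptions the derived focal length is roughly 930 pixels, and a 10 cm lane line at 50 m comes out at just under 2 pixels, which is consistent with the lane line figure quoted in this article.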

A monocular camera can also measure distance

(2) Lane line detection data is used for headway monitoring and warning and for forward collision warning. A lane line 10 cm wide at a distance of 50 m corresponds to 2 pixels on the CMOS sensor. The system recognizes the lane line in the camera image, calculates the width of the lane line and its position relative to the vehicle from the camera's field of view and the position of the windshield, and uses Kalman filtering to estimate the lane line.
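The article does not say which state variables or motion model the Kalman filter uses. As a minimal sketch of the idea, the example below smooths a noisy lateral offset to the lane line with a scalar Kalman filter and a random-walk model. The class name, the noise values, and the measurements are all hypothetical.

```python
class ScalarKalman:
    """One-dimensional Kalman filter with a random-walk state model (an assumption)."""

    def __init__(self, x0: float, p0: float, q: float, r: float) -> None:
        self.x = x0  # state estimate: lateral offset to the lane line, in meters
        self.p = p0  # variance of the estimate
        self.q = q   # process noise variance
        self.r = r   # measurement noise variance

    def update(self, z: float) -> float:
        # Predict: the state is assumed unchanged, but its uncertainty grows.
        self.p += self.q
        # Correct: blend prediction and measurement using the Kalman gain.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x


# Hypothetical per-frame measurements of the lane line offset (meters).
measurements = [1.52, 1.47, 1.55, 1.49, 1.51, 1.46, 1.53]
kf = ScalarKalman(x0=measurements[0], p0=1.0, q=1e-4, r=0.01)
estimates = [kf.update(z) for z in measurements]
print([round(e, 3) for e in estimates])
```

A production lane tracker would more likely track several parameters at once (for example offset, heading, and curvature), but the predict and correct steps have the same shape.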

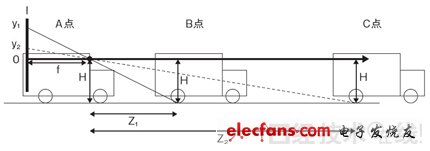

(3) The distance to the vehicle ahead is calculated using the principle of perspective (Figure 8), as follows. The height (H) of the camera above the ground is known, and the point where the vehicle ahead touches the road appears a few degrees below the point at infinity (the focus of expansion) on the horizon.

Figure 8: The principle of distance detection

The monocular camera measures the distance between vehicles using the principle of perspective. The height H of the camera above the ground is measured in advance, and the distance Z is calculated from the geometric relationship.

The height (y) of the image projected on the CMOS sensor inside the camera varies with the distance to the vehicle ahead (more precisely, to the contact point between its rear tires and the road). The focal length (f) of the camera is also known. From the proportion "H : Z = y : f", that is, Z = f × H / y, the distance Z to the preceding vehicle can be obtained. The time to collision can then be obtained as "Z / relative speed".
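The proportion "H : Z = y : f" and the time-to-collision formula can be written out directly. The sketch below assumes that y and f are expressed in the same unit (pixels here) and that y is measured downward from the horizon line. The function names and the numeric values are illustrative, not Mobileye parameters.

```python
def distance_to_vehicle(camera_height_m: float, focal_length_px: float, y_px: float) -> float:
    """Solve H : Z = y : f for Z, giving Z = f * H / y."""
    if y_px <= 0:
        raise ValueError("contact point must lie below the horizon (y > 0)")
    return focal_length_px * camera_height_m / y_px


def time_to_collision(distance_m: float, closing_speed_mps: float) -> float:
    """Z / relative speed; infinite if the gap is not closing."""
    if closing_speed_mps <= 0:
        return float("inf")
    return distance_m / closing_speed_mps


# Hypothetical values: camera 1.2 m above the road, f = 950 px,
# tire contact point imaged 30 px below the horizon, closing at 10 m/s.
z = distance_to_vehicle(camera_height_m=1.2, focal_length_px=950, y_px=30)
ttc = time_to_collision(z, closing_speed_mps=10.0)
print(round(z, 1), round(ttc, 2))
```

Because Z is inversely proportional to y, a one-pixel error in y matters far more at long range than at short range, which is one reason the usable range of this method is limited.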

Overcoming the difficulty of pedestrian detection

(7) Pedestrian detection is much more difficult than vehicle identification. Unlike vehicles, pedestrians vary widely in movement, clothing, and the appearance of body parts, and they must be distinguished from background patterns such as buildings, cars, utility poles, and street trees.

To minimize the detection delay when someone walks toward the driving lane, pedestrians outside the lane must also be detected. A certain degree of misjudgment can therefore be tolerated.

Improving the accuracy of pedestrian detection relies on two steps: "single frame classification" and "multi-frame authentication". First, "single frame classification" judges that a candidate "seems to be a pedestrian", and the candidate is then passed to "multi-frame authentication". When a pedestrian is detected entering the lane, the alert level is raised and measures such as an immediate alarm are taken.

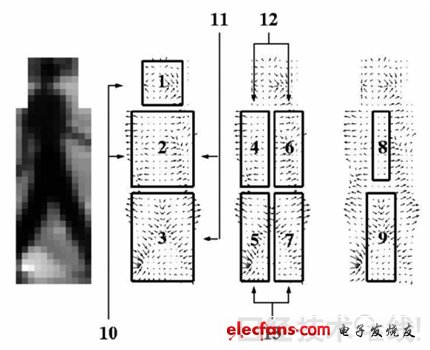

In pedestrian recognition, the system divides the image region presumed to be a pedestrian into 9 areas to extract features (Figure 9) and combines these with changes across multiple frames to improve accuracy. The tall rectangular pedestrian candidate, taken from the VGA-resolution image, is rescaled to 12 × 36 pixels and enters the "single frame classification" process. Classification is based on a test data set of 150,000 wide-ranging pedestrian examples (covering motion and standing still, lighting, background patterns, weather conditions, and weather-dependent visibility) at distances of 3 to 25 meters (Note 3).

Figure 9: Extracting pedestrian features

The captured image is divided into 9 areas to extract features and judge whether it shows a pedestrian.
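As a rough sketch of the region split, the example below divides a 12 × 36 pixel patch into 9 areas and computes one feature per area. The article does not describe how the 9 areas are laid out or which features are extracted, so the non-overlapping 3 × 3 grid and the mean-intensity feature used here are assumptions chosen only to keep the example short.

```python
from typing import List

Patch = List[List[int]]  # rows of grayscale pixel values


def split_into_regions(patch: Patch, rows: int = 3, cols: int = 3) -> List[Patch]:
    """Split a patch into rows * cols equal, non-overlapping regions (assumed layout)."""
    height, width = len(patch), len(patch[0])
    if height % rows or width % cols:
        raise ValueError("patch size must be divisible by the grid size")
    cell_h, cell_w = height // rows, width // cols
    regions = []
    for r in range(rows):
        for c in range(cols):
            block = patch[r * cell_h:(r + 1) * cell_h]
            regions.append([row[c * cell_w:(c + 1) * cell_w] for row in block])
    return regions


def region_features(patch: Patch) -> List[float]:
    """One placeholder feature per region: the mean intensity."""
    features = []
    for region in split_into_regions(patch):
        pixels = [p for row in region for p in row]
        features.append(sum(pixels) / len(pixels))
    return features


# Synthetic 12-wide by 36-high patch standing in for a rescaled pedestrian candidate.
patch = [[(x + y) % 256 for x in range(12)] for y in range(36)]
features = region_features(patch)
print(len(features))
```

A real classifier would use richer per-region descriptors (for example gradient orientation statistics) and feed the resulting feature vector to a trained model.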

(Note 3) The figures are based on the paper published by Mobileye, and may differ from the current product parameters.

The test data set was built from 50 hours of driving in urban areas of Japan, Germany (Munich), the United States (Detroit), and Israel. With this method, the detection rate reaches 90% at a misjudgment rate of 5.5%. After balancing the detection rate against the misjudgment rate, the final detection rate is 93.5% with a misjudgment rate of about 8%.

For cases that "single frame classification" cannot resolve, the "multi-frame authentication" step takes gait and motion detection, re-detection, and foot position detection into account in order to distinguish pedestrians from stationary background objects (utility poles, trees, guardrails). With these improvements, the system can identify pedestrians more than 1 m tall at up to 30 m ahead during the day.
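The general idea of accumulating evidence over several frames can be illustrated with a simple voting scheme. This is a simplified stand-in, not the method described above: it ignores gait, motion, and foot position and only confirms a candidate once enough recent single-frame scores exceed a threshold. The class name, window size, hit count, threshold, and scores are all hypothetical.

```python
from collections import deque
from typing import Deque, List


class MultiFrameConfirmer:
    """Confirm a candidate when at least min_hits of the last `window` frames pass."""

    def __init__(self, window: int = 5, min_hits: int = 3, threshold: float = 0.5) -> None:
        self.history: Deque[bool] = deque(maxlen=window)
        self.min_hits = min_hits
        self.threshold = threshold

    def update(self, single_frame_score: float) -> bool:
        # Record whether this frame's single-frame classifier score passed.
        self.history.append(single_frame_score >= self.threshold)
        # Confirm only if enough recent frames agree.
        return sum(self.history) >= self.min_hits


# Hypothetical single-frame scores for one tracked candidate.
scores: List[float] = [0.7, 0.4, 0.8, 0.9, 0.3, 0.2, 0.1, 0.1, 0.1]
confirmer = MultiFrameConfirmer()
decisions = [confirmer.update(s) for s in scores]
print(decisions)
```

Requiring agreement across frames trades a few frames of latency for fewer false alarms on stationary objects that only occasionally resemble a pedestrian.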

Weaknesses unique to the camera method

Our collision warning system also has weaknesses. Because recognition is based on camera images, things that the human eye cannot see cannot be recognized either. For example, faded lane lines, or lane lines that are hard to see in rain or snow, may not be recognized.

Near tunnel entrances and exits, the image of the vehicle ahead overlapping with the image of the tunnel may cause false alarms. Reflections of the vehicle ahead on a rain-wetted road may also cause false alarms. When the sun is directly ahead near the horizon, the camera sometimes cannot correctly identify the vehicle ahead. Moreover, water droplets on the windshield directly in front of the camera can act like a fisheye lens, causing the system to misjudge the size of the vehicle ahead (Note 4).

(Note 4) These are the situations and conditions that Mobileye's technical department lists as possible causes of misjudgment.

Autonomous driving is also in view for the future

To address these weaknesses, we have begun improving the image processing SoC. We are currently developing a third-generation product called "EyeQ3" in cooperation with STMicroelectronics, and plan to start supplying products equipped with this SoC in 2014.

EyeQ3 embeds four "MIPS32 cores" that support multithreading, and each CPU core is paired with our VMP (vector microcode processor). By flexibly distributing control and data processing, we aim to achieve 6 times the performance of EyeQ2.

We also plan to support processing of large volumes of image data, such as input from multiple cameras. If cameras can be installed at the rear and sides as well as the front to gather information, a more reliable collision warning system should be achievable.

As cameras become integrated with cars, autonomous driving has also come into view, and development of this technology is now under way. Although many problems remain to be solved, we take on these challenges every day. Mobileye will continue to make every effort to develop automotive technologies that are more convenient for the public.
